In earlier posts on our blog, we discussed various types of machine learning, including supervised and unsupervised learning. Today, we continue the series by exploring one of the most versatile and widely used machine learning techniques: hybrid, or semi-supervised, learning.

In this article, you will learn how semi-supervised learning works, what its benefits are, and which algorithms to use.

The definition of semi-supervised learning

As you might already know from our previous articles about supervised and unsupervised learning, a machine learning model can use labeled or unlabeled data for training. Supervised learning uses labeled data, while unsupervised learning employs unlabeled data.

Labeled data usually yields the most accurate models, but a sufficiently large, manually labeled dataset isn’t always available. Semi-supervised, or hybrid, learning is a machine learning technique that trains on a combination of labeled and unlabeled data to improve model performance.

How does semi-supervised learning work?

Hybrid learning addresses the shortage of labeled data: it lets us train models in situations where only a few labeled examples are available, with little or no loss in quality.

Hybrid learning relies on several methods: self-training, co-training, and multiview learning. Each of them can be used to train an effective ML model.

Self-training

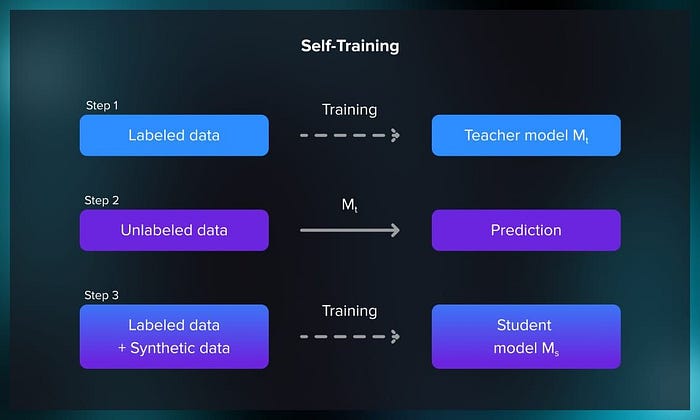

In self-training, a model is initially trained on a small labeled dataset. It then predicts labels for the unlabeled data, and confident predictions are added to the labeled set for subsequent training iterations.
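The loop described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not a reference implementation: the nearest-centroid classifier, the softmax-style confidence score, the `threshold=0.9` cutoff, the toy two-cluster data, and all function names are hypothetical choices made for this example.

```python
import math
import random


def centroids(points, labels):
    """Fit the toy model: the mean point of each class."""
    sums, counts = {}, {}
    for (x, y), lab in zip(points, labels):
        sx, sy = sums.get(lab, (0.0, 0.0))
        sums[lab] = (sx + x, sy + y)
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: (sx / counts[lab], sy / counts[lab])
            for lab, (sx, sy) in sums.items()}


def predict_with_confidence(cents, point):
    """Return (label, confidence); confidence is a softmax over negative distances."""
    weights = {lab: math.exp(-math.dist(point, c)) for lab, c in cents.items()}
    best = max(weights, key=weights.get)
    return best, weights[best] / sum(weights.values())


def self_train(labeled, labels, unlabeled, threshold=0.9, max_rounds=10):
    """Repeatedly move confidently predicted points into the labeled set."""
    labeled, labels, pool = list(labeled), list(labels), list(unlabeled)
    for _ in range(max_rounds):
        cents = centroids(labeled, labels)       # train on the current labeled set
        remaining, added = [], 0
        for p in pool:
            lab, conf = predict_with_confidence(cents, p)
            if conf >= threshold:                # keep only confident pseudo-labels
                labeled.append(p)
                labels.append(lab)
                added += 1
            else:
                remaining.append(p)
        pool = remaining
        if added == 0:                           # nothing new to learn from
            break
    return centroids(labeled, labels), labeled, labels, pool


# Toy data (assumed for illustration): two clusters around (0, 0) and (5, 5).
rng = random.Random(0)
labeled = [(0.2, -0.1), (-0.3, 0.4), (5.1, 4.8), (4.7, 5.3)]
labels = [0, 0, 1, 1]
unlabeled = [(rng.gauss(cx, 0.5), rng.gauss(cy, 0.5))
             for cx, cy in [(0, 0), (5, 5)] for _ in range(50)]

model, final_points, final_labels, leftover = self_train(labeled, labels, unlabeled)
print(len(final_points), "labeled points after self-training;", len(leftover), "left unlabeled")
```

The threshold controls the trade-off: a higher value adds fewer but more reliable pseudo-labels, while a lower value grows the labeled set faster at the risk of reinforcing wrong predictions. In practice, a library implementation such as scikit-learn's `SelfTrainingClassifier` can wrap a real base classifier in the same way.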

Self-training becomes valuable when there is little labeled data but a lot of unlabeled data. It allows the model to make use of its own predictions on unlabeled instances to…